Objectives

- Centralized backup solution for our company

- Automated backup of servers and workstations

- Two complete offline storage copies rotating weekly, bootable in any PC

- At least one offline copy at any time located outside of the company

The Offline Backups – Principle of Operation

Our backup server has been based on BackupPC since 2004. Over the years we have only upgraded the hardware, performed Debian stable dist-upgrades, and changed the underlying storage layers. We are still running on the original XFS partition, copied over to new drives and resized many times to satisfy the ever-growing backup requirements. Currently, our backup server (orfeus) runs on an inexpensive but so far very reliable HP ML115 (dual-core AMD Opteron, 8 GB RAM) with 4.2 TB of total space (1.5 TB + 3 TB drives), utilized at 60%.

The Perils of Offline Copies

We tested several technologies before reaching our current version:

LVM Snapshot – Too Slow

Unfortunately, at that time we were running on SW Raid 5 without aligned RAID/LVM/filesystem blocks. Creating the LVM snapshot caused the write speed of the RAID5 to drop to an unacceptable 2-5 MB/s. Later I learned about the seek overhead the snapshot brought (8x ?).

Copying the Raw Partition – Keeps the Backup Software Down for Too Long

This setup required shutting backuppc down while the partition was copied with dd to another machine over a gigabit link. Unfortunately, the copying took a long time since the whole partition had to be copied over for each offline run.

Our Final Solution – Degraded SW Raid1 – Mirrors with Write-Intent Bitmaps

For the main storage we are using SW raid over 4 drives. Each drive holds three partitions (edit: drives larger than 2TB have a GPT partition table, and to be bootable they have an additional sdX1 partition – http://www.anchor.com.au/blog/2012/10/booting-large-gpt-disks-without-efi/ ):

- Root filesystem raid1 mirror md1 – made of 4 sdX1/2

- Swap raid1 mirror md2 – made of 4 sdX2/3

- Data partition – sdX3/4

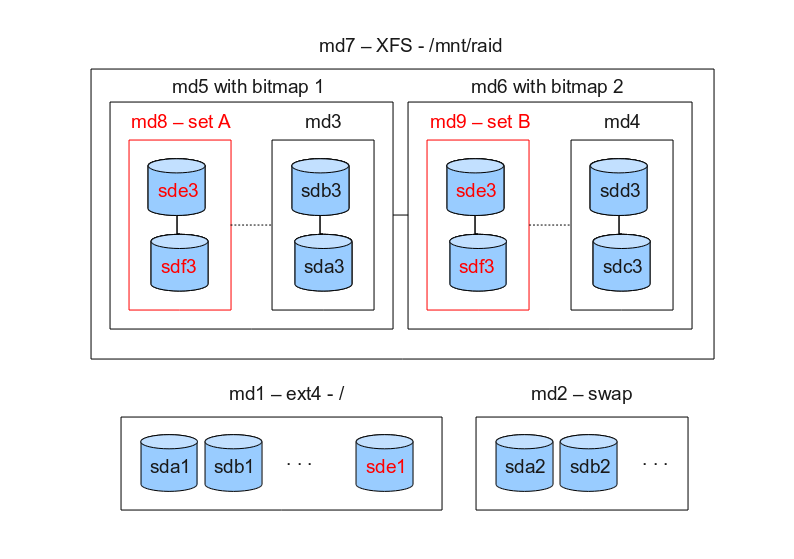

The data partitions are the basis for the following structure of Raid0 stripes and Raid1 mirrors:

- Two pairs of sdX3 partitions constitute two Raid0 stripes: md3 (sda3 + sdb3) and md4 (sdc3 + sdd3).

- Each of md3 and md4 provides one half of the two-component Raid1 mirrors md5 and md6, which run in degraded mode under regular operation. The mirrors md5 and md6 have their write-intent bitmaps enabled (mdadm --bitmap=internal).

- The mirrors md5 and md6 constitute the main Raid1 mirror md7, formatted with the XFS filesystem and mounted to /mnt/raid.
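The layering described above could be built roughly as follows. This is only a sketch under assumptions, not the exact commands we ran at the time: the helper name print_layout_commands is hypothetical, the device names are the ones used in the text, and the function merely prints the mdadm invocations so that they can be reviewed before anything is executed.

```shell
# Sketch only: prints (does not run) commands that would build the md3..md7 layering.
# Device names follow the text above; adjust them before using any of this.
print_layout_commands() {
    # two Raid0 stripes over the internal data partitions
    echo "mdadm --create /dev/md3 --level=0 --raid-devices=2 /dev/sda3 /dev/sdb3"
    echo "mdadm --create /dev/md4 --level=0 --raid-devices=2 /dev/sdc3 /dev/sdd3"
    # two Raid1 mirrors created degraded: "missing" reserves the slot for the external stripe
    echo "mdadm --create /dev/md5 --level=1 --raid-devices=2 --bitmap=internal /dev/md3 missing"
    echo "mdadm --create /dev/md6 --level=1 --raid-devices=2 --bitmap=internal /dev/md4 missing"
    # the main mirror on top, carrying the XFS filesystem
    echo "mdadm --create /dev/md7 --level=1 --raid-devices=2 /dev/md5 /dev/md6"
    echo "mkfs.xfs /dev/md7"
    echo "mount /dev/md7 /mnt/raid"
}

print_layout_commands
```

Printing instead of executing is a deliberate choice here: creating arrays destroys existing data, so the commands should be checked against the actual drive names first.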

Offline copying

We rotate two sets of external drives (sde, sdf) weekly. They have the same partition layout as their internal counterparts (created by a simple sfdisk -d /dev/sdX | sfdisk /dev/sdY). The external drives are hooked to the backup server via an additional eSATA controller with hotplug capability; for details see below. Similarly to the internal drives, the data partitions of the external drives (sde3, sdf3) are components of the Raid0 stripe md8 (or md9 for the second set). The stripe md8 is the missing part of md5, the stripe md9 is the missing part of md6. The UUID of the md8 stripe fits the md5 mirror, while the UUID of md9 corresponds to the md6 mirror. This allows us to take advantage of the automatic raid assembly feature – mdadm -A --scan.

Why use two degraded mirrors (md5, md6)? Remember the write-intent bitmaps enabled on these arrays: they greatly reduce the synchronization time by keeping a list of the blocks dirtied since the last synchronization. Since the bitmap supports only one external component, for two external sets (md8, md9) we have to keep two Raid1 mirrors (md5, md6).
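Whether a mirror is currently degraded and whether it carries a write-intent bitmap can both be read from /proc/mdstat. The helper below is a minimal sketch, not part of our scripts: md_status is a hypothetical name, and the parsing assumes the usual mdstat layout (an "mdX : ..." header line followed by indented detail lines, including a "bitmap:" line when a bitmap is present).

```shell
# md_status ARRAY [MDSTAT_FILE]
# Prints "degraded" or "complete", followed by "bitmap" or "nobitmap".
# Returns non-zero when ARRAY is not listed in the file.
md_status() {
    awk -v md="$1" '
        $1 == md && $2 == ":" { inside = 1; next }   # header line of the requested array
        inside && !/^[ \t]/   { inside = 0 }          # blank or unindented line ends its block
        inside && /\[[U_]+\]/ { state = ($NF ~ /_/) ? "degraded" : "complete" }
        inside && /bitmap:/   { bitmap = "bitmap" }
        END {
            if (state == "") exit 1
            print state, (bitmap == "" ? "nobitmap" : bitmap)
        }
    ' "${2:-/proc/mdstat}"
}

# Example (on the backup server): md_status md5
```

The second argument exists only so that the function can be tried against a saved copy of /proc/mdstat.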

Practical Considerations for the eSATA Controller

It is important to make sure the external drives are not added to the arrays automatically upon startup/reboot. In our experience, such a situation often led to corrupted arrays, because the degraded mirrors were assembled with the external drives instead of the up-to-date internal partitions.

There are several solutions, such as:

- Commenting out the md8/md9 lines in /etc/mdadm/mdadm.conf and rebuilding initramfs.

- Blacklisting the module for the eSATA controller (ahci in our case) and loading the module manually in /etc/rc.local at the end of the boot. Blacklisting in /etc/modules must be accompanied by regenerating initramfs, otherwise the module gets loaded anyway. For details see http://linux.koolsolutions.com/2009/10/11/howto-blacklisting-kernel-module-from-auto-loading-in-debian/.
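The blacklisting variant can be sketched as follows. Treat it as an illustration under assumptions rather than a copy of our setup: the helper write_blacklist and the file name blacklist-MODULE.conf are hypothetical, and the sketch assumes the blacklist entry lives in a file under /etc/modprobe.d/, which is the usual Debian location.

```shell
# write_blacklist MODULE [DIR]
# Writes a modprobe blacklist entry for MODULE into DIR (default /etc/modprobe.d)
# and prints the name of the file it created.
write_blacklist() {
    module=$1
    dir=${2:-/etc/modprobe.d}
    file="$dir/blacklist-$module.conf"
    printf '# keep %s from loading at boot; it is loaded by hand later\nblacklist %s\n' \
        "$module" "$module" > "$file" || return 1
    echo "$file"
}

# Typical use, as root (not executed here):
#   write_blacklist ahci
#   update-initramfs -u     # otherwise the initramfs still loads the module
#   ...then add "modprobe ahci" to /etc/rc.local, before its final "exit 0"
```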

Steps when Creating Offline Copies

- Insert the external SATA drives into the eSATA trays and switch their power on (if applicable).

- Log into the backup server and check that the external drives and their partitions have been properly detected (/dev/sd[ef][123]): cat /proc/partitions

- Run the backup script start-backup.sh – see below. Since the order of drives (which means their names) can change at any boot, the script must not count on fixed names of internal/external drives.

  - The script automatically detects which present partitions belong to the external drives.

  - It reassembles the external Raid0 (either md8 or md9, based on their UUID).

  - The script automatically adds the newly assembled external array to its degraded “parent” md5 or md6.

  - The script automatically reconfigures BackupPC to prevent starting new backup jobs. The currently running jobs are left to finish. This feature minimizes the synchronization time and consequently the time the external drives are online and working – see below.

    Backuppc is disabled by modifying its config file (the directive $Conf{BackupsDisable}) and calling /usr/share/backuppc/bin/BackupPC_serverMesg server reload

    Technically, the start-up script modifies a one-line config external-control.pl, included in config.pl by do "/etc/backuppc/external-control.pl";

- Check the script output for errors; cat /proc/mdstat is run at the end of the script for a quick, convenient check.

- Log off and wait for a few hours until an info mail about backup completion arrives from cron.

- A simple script check-and-finish-backup.sh (see below), run by cron every minute, checks whether the corresponding array (md5 or md6) is already synchronized (i.e. clean). The script performs two actions. When no backuppc dump job is running anymore, it shuts down backuppc altogether to speed up the synchronization. In the subsequent runs, when the array is found to be clean, the script does the following sequence:

  - shuts down backuppc (just in case)

  - remounts the md7 filesystem read-only, flushes buffers (sync)

  - removes the external components (md8/md9) from md5/md6

  - remounts the md7 filesystem back to read-write, starts backuppc and enables new dumps (see above)

  - mounts the external filesystem read-only to a test mount point and checks for the presence of a test directory.

  - If an error occurs, the script reports it and exits. Otherwise, it unmounts the external filesystem, stops the external array md8/md9, and puts the external drives to sleep (hdparm -Y /dev/sd[ef]).

- The operator is informed about the result by a cron-generated email. The external drives are spun down now, the backup server is in standard full operation, and the external drives can be physically removed at any time later.
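While waiting for the completion mail, the progress of the synchronization can be read from /proc/mdstat. The following is a minimal sketch, not part of our scripts: sync_progress is a hypothetical helper, and it assumes the usual mdstat progress line of the form "recovery = N% (...) finish=...min speed=...".

```shell
# sync_progress ARRAY [MDSTAT_FILE]
# Prints the completed percentage and the estimated time to finish for ARRAY.
# Returns non-zero when ARRAY is not being synchronized (or is not listed).
sync_progress() {
    awk -v md="$1" '
        $1 == md && $2 == ":" { inside = 1; next }   # header line of the requested array
        inside && !/^[ \t]/   { inside = 0 }          # blank or unindented line ends its block
        inside && /(recovery|resync) *=/ {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /%$/)       pct = $i
                if ($i ~ /^finish=/) eta = substr($i, 8)   # drop the "finish=" prefix
            }
            print pct, eta
            found = 1
        }
        END { exit found ? 0 : 1 }
    ' "${2:-/proc/mdstat}"
}

# Example (on the backup server): sync_progress md5
```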

Recovery from the Offline Copies

- Hook the external drives to any PC.

- Boot into e.g. Slax LiveCD, no GUI needed.

- Load the raid0 module if needed: modprobe raid0

- Mount the Raid1 partition of the original root partition to /mnt/root: mount -t ext3 /dev/sdX1 /mnt/root. Here we are taking advantage of the fact that the filesystem starts from the beginning of the partition, while the mdadm raid superblock is written at the end.

- Assemble the data Raid0 stripe: mdadm -A /dev/md0 /dev/sdX3 /dev/sdY1

- Check the raid status: cat /proc/mdstat

- Mount the md0 array into the backup server filesystem: mount -t xfs /dev/md0 /mnt/root/mnt/raid

- Mount the auxiliary filesystems: for aux in proc sys; do mount -o bind /$aux /mnt/root/$aux; done

- Chroot into the backup filesystem: chroot /mnt/root

- While in chroot, start apache, backuppc, do whatever is needed.

- Or umount the backup drives and transfer the partitions by dd to a new backup server.
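Mounting the root partition directly, without assembling its mirror, only works when the md superblock sits at the end of the partition. That is the case for metadata versions 0.90 and 1.0; versions 1.1 and 1.2 place the superblock at or near the start. The sketch below checks this before mounting. Both helper names are hypothetical, and metadata_version assumes the "Version : ..." line printed by mdadm --examine.

```shell
# superblock_at_end VERSION
# Succeeds when an md member with this metadata version keeps its superblock at
# the end of the device, so the filesystem begins at offset 0 and the member
# can be mounted directly.
superblock_at_end() {
    case $1 in
        0.90|0.90.*|1.0) return 0 ;;   # superblock stored at the end
        1.1|1.2)         return 1 ;;   # superblock at (or near) the start
        *)               return 2 ;;   # unknown version: do not guess
    esac
}

# metadata_version: reads `mdadm --examine` output on stdin, prints the Version field
metadata_version() {
    awk -F' : ' '$1 ~ /^ *Version$/ { print $2; exit }'
}

# Example (from the live CD, as root):
#   v=$(mdadm --examine /dev/sdX1 | metadata_version)
#   superblock_at_end "$v" && mount -t ext3 -o ro /dev/sdX1 /mnt/root
```

Mounting read-only in the example is a cautious default for a first look at the copy; the step in the list above mounts read-write.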

Source Code

All of the following files reside in a single directory (/root/backup in our case).

start-backup.sh

The script is run manually by our backup operator after the external drives are properly attached, recognized by the system, and their presence verified in /proc/partitions.

#!/bin/bash

TIMEOUT=1m

# loading params
source "$(dirname "$0")/backup.include"

# detect external drives, populate env variables used later in this script
if ! detect_drives; then
    # drives not detected, trying to reload the module
    echo "Removing module ahci"
    rmmod ahci || quit "Could not remove module ahci, exiting" 1
    echo "Loading module ahci"
    modprobe ahci || quit "Could not load module ahci, exiting" 1
    WAIT_MODPROBE="5s"
    echo "Waiting after modprobe for $WAIT_MODPROBE..."
    sleep $WAIT_MODPROBE
fi

# detect external drives, populate env variables used later in this script
detect_drives || exit 1

echo "Assembling the striped array $EXTERNAL_STRIPING"
mdadm -A /dev/$EXTERNAL_STRIPING --scan || quit "Could not assemble the striped array $EXTERNAL_STRIPING, exiting" 1

# waiting a bit for the array to stabilize, otherwise the subsequent ADD fails with "EXTERNAL_STRIPING not large enough to join the DATA_MIRROR"
sleep 2s

echo "Adding the striped array $EXTERNAL_STRIPING to the data mirror $DATA_MIRROR"
mdadm /dev/$DATA_MIRROR --add /dev/$EXTERNAL_STRIPING || quit "Could not add the striped array to the data mirror, exiting" 1

echo "Adding the external root partitions to the root mirror $ROOT_MIRROR"
for PARTITION in $EXTERNAL_ROOT_PARTITIONS; do
    mdadm /dev/$ROOT_MIRROR --add /dev/$PARTITION || quit "Could not add the external root partition $PARTITION to the root mirror, exiting" 1
done

# wait a bit for changes to appear in mdstat
sleep 1s

# show us it is working
cat /proc/mdstat

# disable new backups in backuppc
disable_backup

echo "Synchronizing the mirror, i.e. running the backup now, it will take several hours. Thanks."

backup.include

The file contains definitions of key variables and helper functions. It is included (source ...) by the scripts.

ROOT_MIRROR=md1
XFS_MIRROR=md7

# data mirrors must keep the same order as external stripings
DATA_MIRRORS=( md5 md6 )
EXTERNAL_STRIPINGS=( md8 md9 )

BACKUPPC_CTRL_CONF="/etc/backuppc/external-control.pl"
BACKUPPC_CTRL_DISABLE='$Conf{BackupsDisable} = 2;'
BACKUPPC_CTRL_ENABLE='$Conf{BackupsDisable} = 0;'
BACKUPPC_RELOAD_CMD="su backuppc -c '/usr/share/backuppc/bin/BackupPC_serverMesg server reload'"

export OUT_MSG
export SILENT

# Print a message and exit. Usage: quit message exit_code
function quit {
    echo "$1"
    exit $2
}

# Conditional text output. Usage: out message
# The condition depends on variable $SILENT
function out {
    MSG=$1
    # always collect the message, one per line, so it can be printed later
    OUT_MSG="$OUT_MSG"$'\n'"$MSG"
    if [ -z "$SILENT" ]; then
        # not silent, output the message
        echo "$MSG"
    fi
}

# Detecting external drives and partitions. No parameters
# Output - either set variables EXTERNAL_DEVICES, EXTERNAL_ROOT_PARTITIONS, EXTERNAL_STRIPING and its corresponding DATA_MIRROR, or return 1
function detect_drives {
    # cleanup
    EXTERNAL_ROOT_PARTITIONS=""
    EXTERNAL_DEVICES=""
    EXTERNAL_STRIPING=""
    DATA_MIRROR=""

    out "Detecting the external discs"

    # for each external striping - read its UUID from mdadm.conf and check whether there is a partition with such mdadm UUID
    for (( i = 0 ; i < ${#EXTERNAL_STRIPINGS[@]} ; i++ )); do
        E_S=${EXTERNAL_STRIPINGS[$i]}
        E_S_UUID=$(grep "ARRAY.*${E_S}" /etc/mdadm/mdadm.conf | sed 's/.*UUID=//g')

        # trying to find out if such UUID exists among known partitions, starting with 3. This is to make sure we detect the external drive with the root partition too (there can be external drives with data partitions only, these would be sX1, not sX3)
        for PARTITION in $(cat /proc/partitions | awk '{print $4}' | grep 'sd.*[3-9]'); do
            if mdadm --examine /dev/$PARTITION 2>/dev/null | grep -q "$E_S_UUID"; then
                EXTERNAL_STRIPING=$E_S
                # list of external devices
                EXTERNAL_DEVICES="$EXTERNAL_DEVICES ${PARTITION/[0-9]/}"
                DATA_MIRROR=${DATA_MIRRORS[$i]}
            fi
        done

        # if found, no reason to loop the outer cycle
        [ -n "$EXTERNAL_STRIPING" ] && break
    done

    if [ -z "$EXTERNAL_STRIPING" ]; then
        out "Could not find any partition belonging to any of the external stripings. Exiting"
        return 1
    fi

    out "External striping: $EXTERNAL_STRIPING"
    out "Corresponding data mirror: $DATA_MIRROR"

    # Checking whether the external devices contain at least one root mirror. Otherwise exiting.
    ROOT_MIRROR_UUID=$(mdadm --detail /dev/$ROOT_MIRROR 2>/dev/null | grep UUID | awk '{print $3}')

    for EXTERNAL_DEVICE in $EXTERNAL_DEVICES; do
        # for each external device, check all partitions
        for PARTITION in $(cat /proc/partitions | grep $EXTERNAL_DEVICE | awk '{print $4}'); do
            if mdadm --examine /dev/$PARTITION 2>/dev/null | grep -q "$ROOT_MIRROR_UUID"; then
                EXTERNAL_ROOT_PARTITIONS="$EXTERNAL_ROOT_PARTITIONS $PARTITION"
            fi
        done
    done

    if [ -n "$EXTERNAL_ROOT_PARTITIONS" ]; then
        out "External root partitions: $EXTERNAL_ROOT_PARTITIONS"
    else
        out "Could not find any root partition on the external discs, exiting."
        return 1
    fi

    return 0
}

# Disabling the backuppc - do not start new jobs. No parameters
function disable_backup() {
    echo "Disabling backuppc dump runs."
    echo "$BACKUPPC_CTRL_DISABLE" > "$BACKUPPC_CTRL_CONF"
    eval $BACKUPPC_RELOAD_CMD
}

# Enabling new jobs in backuppc. No parameters
function enable_backup() {
    echo "Enabling backuppc dump runs"
    echo "$BACKUPPC_CTRL_ENABLE" > "$BACKUPPC_CTRL_CONF"
    eval $BACKUPPC_RELOAD_CMD
}
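One detail of detect_drives worth knowing: the expansion ${PARTITION/[0-9]/} removes only the first digit of the partition name. That is fine for names like sde3, but a two-digit partition number such as sde10 would yield sde0. If that ever matters, the disk name could be derived as sketched below; disk_of is a hypothetical helper, not part of our scripts.

```shell
# disk_of PARTITION
# Prints the whole-disk name for an sdXN partition name, e.g. sde3 -> sde.
disk_of() {
    # strip every trailing digit, not just the first one
    echo "$1" | sed 's/[0-9]*$//'
}

# Example: disk_of sde10
```

The sed form also works in plain /bin/sh, unlike the bash-only pattern substitution.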

check-and-finish-backup.sh

The script is run by cron every minute. It checks whether the conditions for finishing the offline backup are met. Specifically, the data mirror (md5/md6) must already be synchronized, no backuppc dump may still be running, and there must be no other running processes we do not want to interrupt. If the conditions are met, the script calls the finish-backup.sh script.
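The cron entry itself is not shown in this article. A minimal sketch of how it could be installed follows; the helper install_cron_entry, the file name /etc/cron.d/offline-backup and the MAILTO value are all assumptions for illustration, only the script path comes from the text.

```shell
# install_cron_entry SCRIPT [CRON_FILE]
# Writes a cron.d-style entry that runs SCRIPT every minute as root.
install_cron_entry() {
    script=$1
    cron_file=${2:-/etc/cron.d/offline-backup}
    {
        echo "# offline backup: finish as soon as the mirror is synchronized"
        echo "MAILTO=root"
        echo "* * * * * root $script"
    } > "$cron_file"
}

# Example (as root): install_cron_entry /root/backup/check-and-finish-backup.sh
```

Because cron mails whatever a job prints, the script has to stay silent on the runs where there is nothing to report; otherwise a mail would arrive every minute.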

#!/bin/bash

PATH=$PATH:/bin:/sbin/:/usr/bin:/usr/sbin

source "$(dirname "$0")/backup.include"

# checking whether the external arrays/stripings are assembled, otherwise quit
for PARTITION in "${EXTERNAL_STRIPINGS[@]}"; do
    grep -q "$PARTITION" /proc/mdstat && FOUND=1 && break
done

if [ -z "$FOUND" ]; then
    # found no external stripings active, nothing to do
    exit 0
fi

# external stripings found, the external backup drives are present and arrays assembled, continuing
# switching to silent mode - all outputs are stored in OUT_MSG instead. This is for cron to avoid sending information mails each run (i.e. every minute)
SILENT=1

detect_drives || exit 1

# any backuppc dump task running?
if pgrep BackupPC_dump > /dev/null; then
    # backuppc dump runs, exiting
    exit 0
fi

# no backuppc dump currently running, continue
# check if the data mirror is already synchronized
# still degraded ?
if mdadm --detail /dev/$DATA_MIRROR 2>/dev/null | grep -q "State.*degraded"; then
    # the data mirrors are still degraded, their synchronization is still running. In order for backuppc to not start the nightly cleaning which slows down the synchronization, let's shut backuppc down completely.
    if pgrep BackupPC > /dev/null; then
        /etc/init.d/backuppc stop
        echo "Shutting down idle backuppc to speed up backup drive synchronization."
    fi
    # and exiting
    exit 0
fi

# checking other tasks accessing the data mirror that we do not want to kill now (dirvish in our case)
pgrep dirvish-cronjob > /dev/null && exit 0

# here we are ready to finish the backup
# output the previous message since we will be sending mail in cron
echo "$OUT_MSG"

# the data mirror is already synchronized, no backup job running, time to finish
"$(dirname "$0")/finish-backup.sh"

finish-backup.sh

The script removes all partitions of the external drives from their corresponding arrays while making sure the filesystems are preserved. Afterwards, it re-assembles the data filesystem on the external drives alone and runs some simple checks. If everything is OK, it puts the external drives to sleep. The operator is informed about the finished backup by a mail sent from cron.

#!/bin/bash
# bash is required: backup.include uses arrays and the script uses the "source" builtin

# loading params
source "$(dirname "$0")/backup.include"

detect_drives || exit 1

# before removing the external drives, we need flushed filesystem - read-only. Backuppc must be stopped now.
echo "Shutting down backuppc"
/etc/init.d/backuppc stop
sleep 5

echo "Syncing filesystems"
sync

for PARTITION in $EXTERNAL_ROOT_PARTITIONS; do
    echo "Removing the external root partition $PARTITION from the root mirror $ROOT_MIRROR"
    mdadm /dev/$ROOT_MIRROR --fail /dev/$PARTITION
    sleep 1
    mdadm /dev/$ROOT_MIRROR --remove /dev/$PARTITION
    sleep 1
done

echo "Detecting external drives:"
EXTERNAL=$( mdadm --detail /dev/$EXTERNAL_STRIPING | grep "active sync" | awk '{print $7}' | tr '[0-9]' ' ')
echo "Found external drives: $EXTERNAL"

echo "Remounting the data XFS mirror array $XFS_MIRROR read-only to flush the XFS filesystem"
mount /dev/$XFS_MIRROR -o remount,ro
# mounting read-only may fail, let's sync at least
sync

echo "Removing the external data stripes $EXTERNAL_STRIPING from the data mirror array $DATA_MIRROR"
mdadm /dev/$DATA_MIRROR --fail /dev/$EXTERNAL_STRIPING
sleep 1
mdadm /dev/$DATA_MIRROR --remove /dev/$EXTERNAL_STRIPING
sleep 1

echo "Remounting the XFS mirror array $XFS_MIRROR read-write"
mount /dev/$XFS_MIRROR -o remount,rw

echo "Starting backuppc"
/etc/init.d/backuppc start

# enabling the backup dumps (disabled in the start-backup script)
enable_backup

echo "Stopping the external data stripes array $EXTERNAL_STRIPING"
mdadm --stop /dev/$EXTERNAL_STRIPING

echo "Checking the external backup:"
echo "============================="

TEST_ROOT=/tmp/test_root
# -p: do not fail if the directory was left over from a previous unsuccessful run
mkdir -p $TEST_ROOT
TEST_DATA_MOUNTPOINT=$TEST_ROOT/mnt/raid

# selecting the first external root partition - ugly quick hack
for EXTERNAL_ROOT_PARTITION in $EXTERNAL_ROOT_PARTITIONS; do
    break
done

echo "Mounting the external root fs on $EXTERNAL_ROOT_PARTITION to $TEST_ROOT"
mount -t ext3 /dev/$EXTERNAL_ROOT_PARTITION $TEST_ROOT || quit "ERROR mounting external root fs, incorrect backup, please FIX IT!!" 1

echo "Re-assembling the external stripes array $EXTERNAL_STRIPING"
mdadm -A /dev/$EXTERNAL_STRIPING --scan || quit "ERROR re-assembling the external stripes array, incorrect backup, please FIX IT!!" 2

# we cannot write to the external stripings since that would corrupt their write-intent bitmap. Only read-only mounting allowed!
echo "Mounting read-only the external stripes array $EXTERNAL_STRIPING to $TEST_DATA_MOUNTPOINT"
mount -t xfs -o nouuid,ro /dev/$EXTERNAL_STRIPING $TEST_DATA_MOUNTPOINT || quit "ERROR mounting the backup stripes array, incorrect backup, please FIX IT!!" 3

# checking the filesystem - only checking existence of a particular directory
# NOTE - the TEST_EXISTING_DIR must exist in /mnt/raid!
TEST_EXISTING_DIR=$TEST_DATA_MOUNTPOINT/backuppc
echo "Checking for existence of specific directory - $TEST_EXISTING_DIR"
[ -d $TEST_EXISTING_DIR ] || quit "The directory $TEST_EXISTING_DIR should exist but could not be found, incorrect backup, please FIX IT!!" 4

echo
echo "=============================================="
echo "All checks passed, correct backup"
echo "=============================================="
echo

echo "Unmounting the external stripes array $EXTERNAL_STRIPING from $TEST_DATA_MOUNTPOINT"
umount /dev/$EXTERNAL_STRIPING || quit "ERROR unmounting the external stripes array, cannot continue, FIX IT!!" 5

echo "Unmounting the external root fs on $EXTERNAL_ROOT_PARTITION from $TEST_ROOT"
umount /dev/$EXTERNAL_ROOT_PARTITION || quit "ERROR unmounting external root fs, cannot continue, please FIX IT!!" 6

# wait a bit, sometimes the raid throws "busy" error
sleep 5s

echo "Stopping the external stripes array $EXTERNAL_STRIPING"
mdadm --stop /dev/$EXTERNAL_STRIPING

rmdir $TEST_ROOT

# spinning down the external drives
echo "Spinning down the external drives"
for DRIVE in $EXTERNAL; do
    hdparm -Y $DRIVE
done

echo "Now you can safely turn off the eSATA bays and remove the drives."
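finish-backup.sh mounts the external root filesystem on a fixed directory, /tmp/test_root, which stays behind whenever one of the checks fails and the script quits early. A possible refinement, sketched here with hypothetical helper names and not used in our scripts, is to create a fresh private directory per run and to remove it only when it is empty.

```shell
# make_test_root: creates a private, empty directory for the test mounts and prints its path
make_test_root() {
    mktemp -d "${TMPDIR:-/tmp}/test_root.XXXXXX"
}

# cleanup_test_root DIR: removes DIR only if it is empty.
# rmdir refuses to remove a directory that is non-empty or still a mount point.
cleanup_test_root() {
    [ -d "$1" ] && rmdir "$1"
}

# Example use inside the script:
#   TEST_ROOT=$(make_test_root) || quit "Could not create a test mount point" 1
#   ...mount, check, unmount...
#   cleanup_test_root "$TEST_ROOT"
```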
